Biometric algorithms enable your body to speak through immersive technology. Is this the next phase of human-machine integration?

“It’s pretty common knowledge that body language plays a big role in how we communicate in real life, and the fact is that behavioral studies have been validating the correlation between the body and mind for decades,” explains Amir Bozorgzadeh, Cofounder and CEO at Virtuleap. “In the setting of spatial computing, the whole game elevates as all of our intuitions about non-verbal expression are suddenly given a voice.”

Virtuleap is a Lisbon-based startup, a Boost VC Tribe 11 company, and a member of the CMS equIP startup program. The team initially developed its API to address accessibility needs, broadening the audience for immersive content by adapting it for inclusiveness and user comfort, for example by increasing color contrast or adjusting font size according to individual user preferences.

That human-centric, inclusive angle gradually evolved to incorporate scientific approaches such as neuropsychology and fractal analysis, resulting in what the team considers to be the first native metrics for human-centric research, design, and marketing in the spatial computing era.

The team leveraged modern neuroscience research to create mathematical models that infer emotional states based on your subliminal and unconscious body postures, gestures, and motion in immersive environments. For the next phase of development, they are now looking to stress-test the algorithms by feeding the machine learning with data from a consumer-facing app. The result is the Attention Lab – an Oculus Go “edutainment” app which recently launched in beta – featuring a series of games and challenges that track user interaction.

According to Bozorgzadeh, the concept is a mix of popular VR apps such as Valve’s The Lab and Owlchemy Labs’ Job Simulator, and non-VR games like Lumosity and Cognifit, which are focused on cognition and user attention. It features a series of gamified experiments that capture user cognition in VR. Each experience is powered by a series of biometric algorithms that translate subliminal body postures, gestures, and motion in VR, AR, and 3D environments into meaningful insights about the human condition.

“While players get to enjoy challenging their cognitive abilities, such as memory and concentration, the real benefit of the Attention Lab is access to an unprecedented depth of self-knowledge and psychographics that is enabled thanks to the new data points captured by spatial computing devices.”

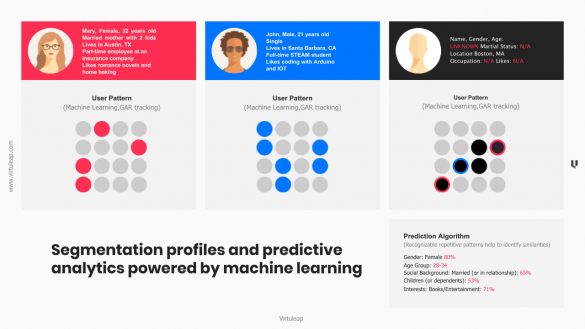

With machine learning, the mathematical models will become more accurate over time as the API tracks and maps physical, physiological, and psychological “segmentation” profiles, building a database that can generate predictive analytics. For example, the API will be able to anticipate the profile of an anonymous user from their behavioral cues by calculating the probabilities of their likely psychographic and demographic profile.

“If it walks like a duck, swims like a duck, and quacks like a duck, then our machine learning calculates the probability of how likely that otherwise anonymous user is a duck,” explains Roland Dubois, Cofounder and CXO at Virtuleap. “Everything we capture in the form of meaningful insights would otherwise require a traditional survey or user interview in order to capture.”
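To make the duck analogy concrete, here is a minimal sketch of how a probability like that could be computed with a naive Bayes update. This is an illustration only, not Virtuleap's actual method, which the company has not published. The `posterior` function, the profile names, the cue names, and every number below are hypothetical.

```python
from math import prod


def posterior(priors, likelihoods, observed_cues):
    """Naive Bayes update: P(profile | cues) is proportional to
    P(profile) times the product of P(cue | profile) over observed cues."""
    unnormalized = {
        profile: priors[profile]
        * prod(likelihoods[profile][cue] for cue in observed_cues)
        for profile in priors
    }
    total = sum(unnormalized.values())
    # Normalize so the probabilities across all profiles sum to 1.
    return {profile: score / total for profile, score in unnormalized.items()}


# Hypothetical numbers, invented purely for illustration.
PRIORS = {"duck": 0.5, "goose": 0.5}
LIKELIHOODS = {
    "duck": {"walks": 0.9, "swims": 0.8, "quacks": 0.9},
    "goose": {"walks": 0.6, "swims": 0.7, "quacks": 0.1},
}

result = posterior(PRIORS, LIKELIHOODS, ["walks", "swims", "quacks"])
print(result)
```

With these made-up inputs, the "duck" profile ends up far more probable than the "goose" profile, because quacking is assumed to be rare for geese. A real system would have to learn such likelihoods from recorded behavioral data rather than hard-code them.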

“Players will get to learn about how their brains work and all sorts of insights about their neuroscience, not just about themselves specifically, but also in how it relates to the neuroscience of their friends and the community at large,” said Dubois. “Each experience will present different famous experiments that will, in turn, allow them to learn about how they react and respond in different situations. Insights that might just blow their minds.”

The Attention Lab is currently featured on the Kaleidoscope VR platform and is set to launch on the Oculus Store in Q2 2019. In parallel, the API will be made publicly available on an online platform later in the year. Virtuleap is currently accepting pilot campaigns with brands and companies in both the consumer and enterprise sectors in order to create what it calls “template models” that will make its biometric algorithms relevant to content across a range of categories.

Source:

Photo: The Attention Lab / Image Credit: Virtuleap

https://vrscout.com/news/xr-attention-lab-body-language/